Activity 20: Neural Networks

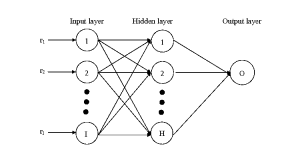

A neural network is a mathematical model based on the workings of the human brain. It is composed of neurons arranged in layers, where each layer is fully connected to the preceding layer. The layers are called the input, hidden and output layers. The input features are fed into the input layer and forwarded to the hidden layer, whose values are in turn forwarded to the output layer.

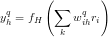

After the neurons are placed in the input, they are fed forward to the hidden neurons. The hidden neurons would have value  where

where  are the weights (strength of connection) association with the ith and hth neuron.

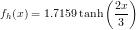

are the weights (strength of connection) association with the ith and hth neuron.  is called the activation function because it mimics the the all-or-nothing firing of the neurons. Both hidden and output layers have activation functions given by

is called the activation function because it mimics the the all-or-nothing firing of the neurons. Both hidden and output layers have activation functions given by

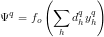

The tansig function is one of the most often used activation functions in pattern recognition problems. The output of the neural network is given by

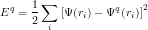

where the weight in this expression is the one associated with the hidden and output layers. The goal of the NN is to minimize the error function E, which compares the network output with the actual output of the fed input pattern. This kind of network is also called a supervised neural network because you already have an idea of what the output is. In a way, you can also look at it as forcing the NN to adjust its weights such that a given input produces the desired output.
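As a rough sketch of the feed-forward step described above, the snippet below pushes one input pattern through a small network using only base MATLAB functions. The layer sizes, the weight values, the names `tansig_fn`, `W_ih` and `W_ho`, and the absence of bias terms are all assumptions made for illustration.

```matlab
% Feed-forward sketch: input -> hidden -> output.
% Assumptions: hypothetical weights, no bias terms, tansig on both layers.
tansig_fn = @(n) 2 ./ (1 + exp(-2 * n)) - 1;  % numerically the same as tanh(n)

x    = [0.2; 0.7];                      % one input pattern with 2 features
W_ih = [0.1 -0.3; 0.4 0.2; -0.5 0.6];   % input-to-hidden weights (3 x 2)
W_ho = [0.3 -0.1 0.2; -0.4 0.5 0.1];    % hidden-to-output weights (2 x 3)

z = tansig_fn(W_ih * x);   % values of the 3 hidden neurons
o = tansig_fn(W_ho * z);   % values of the 2 output neurons
```

Each row of a weight matrix holds the connection strengths feeding one neuron, so a single matrix product evaluates a whole layer at once.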

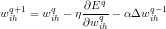
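One common form of the error function, assumed here rather than taken from the post, is the sum of squared differences between the network outputs and the actual outputs. The symbols $o_k$ (network output at output neuron $k$) and $t_k$ (actual output for that neuron) are names chosen for this sketch.

```latex
E = \frac{1}{2} \sum_{k} \left( t_k - o_k \right)^2
```

With this form, E is zero only when every output neuron matches its actual output, and it grows as the outputs drift away from them.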

The weights are updated iteratively, with each new value computed from the weight at the qth iteration. A similar process is done for the other set of weights.
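To make the update concrete, here is a minimal sketch of a single gradient-descent step, which is what the `traingd` option in the code further down refers to. It assumes the rule w(q+1) = w(q) - eta * dE/dw, a sum-of-squares error E = 0.5 * sum((t - o).^2), tanh activations on both layers and no bias terms. The weight values and variable names are hypothetical.

```matlab
% One gradient-descent update for a network with one hidden layer.
% Assumptions: no biases, tanh activations, E = 0.5 * sum((t - o).^2).
x   = [0.2; 0.7];                      % input pattern
t   = [1; 0];                          % actual (desired) output
W1  = [0.1 -0.3; 0.4 0.2; -0.5 0.6];   % input-to-hidden weights at iteration q
W2  = [0.3 -0.1 0.2; -0.4 0.5 0.1];    % hidden-to-output weights at iteration q
eta = 0.01;                            % learning rate

% Forward pass
z = tanh(W1 * x);
o = tanh(W2 * z);
E_before = 0.5 * sum((t - o).^2);

% Backward pass; the derivative of tanh(a) is 1 - tanh(a)^2
delta_o = (o - t) .* (1 - o.^2);           % dE/d(net input), output layer
delta_h = (W2' * delta_o) .* (1 - z.^2);   % dE/d(net input), hidden layer

% Weights at iteration q+1
W2_new = W2 - eta * (delta_o * z');
W1_new = W1 - eta * (delta_h * x');

% Error after the update
o_new   = tanh(W2_new * tanh(W1_new * x));
E_after = 0.5 * sum((t - o_new).^2);
```

For a small enough learning rate, `E_after` comes out below `E_before`. Repeating the step over many iterations and input patterns is what training amounts to.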

As a note, a neural network may have more than one output neuron. For a 3-class classification with two output neurons, group A can be encoded as 0-0, group B as 0-1 and group C as 1-1. It is also important to normalize the inputs to between 0 and 1 because sigmoidal functions plateau at high values. That is, the tansigmoid of 100 is practically the same as the tansigmoid of 200. Finally, the output of the NN will not always be exactly 1s or 0s. Thus, some sort of thresholding is done: if the output is greater than 0.5 it is set to 1, otherwise to 0.
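The three points above (normalization, saturation and thresholding) can be sketched in a few lines. The data values and variable names below are hypothetical, the 0.5 cut-off uses `>=` as in the code later in the post, and the lookup string `'AB?C'` is just one way to decode the two-bit codes, with `?` standing for the unused 1-0 combination.

```matlab
% Min-max normalization of each feature (row) to the range [0, 1]
X    = [10 20 40; 1 3 5];                 % 2 features x 3 samples
n    = size(X, 2);
Xmin = min(X, [], 2);
Xmax = max(X, [], 2);
Xn   = (X - repmat(Xmin, 1, n)) ./ repmat(Xmax - Xmin, 1, n);

% Saturation: large inputs are indistinguishable after tanh
sat_gap = abs(tanh(100) - tanh(200));     % effectively zero

% Thresholding raw outputs, one column per sample
raw  = [0.1 0.3 0.9; 0.2 0.8 0.7];
bits = double(raw >= 0.5);                % [0 0 1; 0 1 1]

% Decode 0-0 -> A, 0-1 -> B, 1-1 -> C
code   = 2 * bits(1, :) + bits(2, :);     % 0, 1 or 3 for valid codes
names  = 'AB?C';
groups = names(code + 1);
```

Here `groups` comes out as `'ABC'`, one letter per sample.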

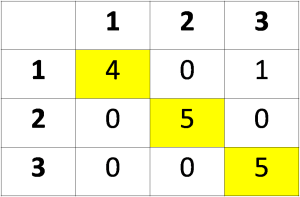

For my classification my results are as follows.

This has an accuracy of 93.3%. In addition, the training set is classified with 100% accuracy. My code, written in MATLAB, is as follows:

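net = newff(minmax(training), [25,2], {'tansig','tansig'}, 'traingd'); % 25 hidden neurons, 2 output neurons, gradient descent training

net.trainParam.show = 50; % show progress every 50 epochs

net.trainParam.lr = 0.01; % learning rate

%net.trainParam.lr_inc = 1.05;

net.trainParam.epochs = 1000; % maximum number of epochs

net.trainParam.goal = 1e-2; % stop once the error reaches this value

[net,tr] = train(net, training, training_out);

a = sim(net, training); % network output for the training set

a = 1*(a>=0.5); % threshold to 0 or 1

b = sim(net, test); % network output for the test set

b = 1*(b>=0.5); % threshold to 0 or 1

diff = abs(training_out - b); % mismatch between training_out and the thresholded test output

diff = diff(1,:) + diff(2,:); % number of mismatched output bits per sample

diff = 1*(diff>=1); % 1 if the sample is misclassified, 0 otherwise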
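The listing ends with a row of per-sample mismatch flags. One way to turn such flags into an accuracy percentage is sketched below. The target and predicted codes are made-up values for five samples, not the data behind the figures quoted above, and the variable names are hypothetical.

```matlab
% Accuracy from thresholded outputs (hypothetical 2-bit class codes)
targets   = [0 0 0 1 1; 0 1 1 1 1];   % desired codes, one column per sample
predicted = [0 0 1 1 1; 0 1 1 1 1];   % thresholded network outputs

wrong    = any(targets ~= predicted, 1);          % true where any output bit differs
accuracy = 100 * sum(~wrong) / numel(wrong);      % percentage of correct samples
```

In this made-up case only the third sample is misclassified, so `accuracy` is 80.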
