Activity 19: Linear Discriminant Analysis

Linear discriminant analysis (LDA) is one of the simplest classification techniques to implement: it builds a discriminant function for each class from the predictor variables. In this activity, I used it to classify images of two snacks, Nova and Piatos. The features I used were their mean RGB values and their size (area).

The discriminant values f1 and f2 obtained for each sample are as follows:

| Group | f1 | f2 |
|---|---|---|
| 1 | 636.89 | 593.53 |
| 1 | 588.54 | 551.74 |
| 1 | 587.14 | 561.56 |
| 1 | 553.42 | 515.54 |
| 2 | 340.98 | 392.78 |
| 2 | 409.91 | 434.11 |
| 2 | 438.59 | 474.51 |
| 2 | 431.21 | 462.89 |

An object is assigned to the class with the highest f value. In the values above, every group 1 sample has f1 > f2, while every group 2 sample has f2 > f1.
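For reference, the decision rule can be written out explicitly. The expressions below restate what the Scilab code in this post computes; the symbols are notation chosen here for illustration: $\boldsymbol{\mu}_i$ is the row vector of feature means of group $i$ (`m1`, `m2` in the code), $\mathbf{x}_k$ is the feature row vector of sample $k$, $X_i^{0}$ is the mean-corrected data of group $i$ (`training1`, `training2`), $C$ is the pooled covariance matrix (`c`), and $p_i$ is the prior probability of group $i$ (0.5 each in the code).

```latex
c_i = \frac{1}{n_i}\,\bigl(X_i^{0}\bigr)^{T} X_i^{0},
\qquad
C = \frac{1}{n_1 + n_2}\,\bigl(n_1 c_1 + n_2 c_2\bigr)

f_i(\mathbf{x}_k) = \boldsymbol{\mu}_i\, C^{-1}\, \mathbf{x}_k^{T}
  - \frac{1}{2}\, \boldsymbol{\mu}_i\, C^{-1}\, \boldsymbol{\mu}_i^{T}
  + \ln p_i,
\qquad i = 1, 2
```

Sample $k$ is assigned to group 1 if $f_1(\mathbf{x}_k) > f_2(\mathbf{x}_k)$ and to group 2 otherwise.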

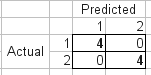

As the confusion matrix shows, all samples were classified correctly (100% classification accuracy).
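A confusion matrix like this can be tallied directly from the f values listed earlier. The snippet below is a minimal sketch, not the code used to produce the figure: the variable names `actual`, `predicted` and `confusion` are made up for illustration, and it assumes the first four samples belong to group 1 and the last four to group 2, as in the list of f values.

```scilab
// f values copied from the list above (assumed order: 4 samples of group 1, then 4 of group 2)
f1 = [636.89 588.54 587.14 553.42 340.98 409.91 438.59 431.21];
f2 = [593.53 551.74 561.56 515.54 392.78 434.11 474.51 462.89];
actual = [1 1 1 1 2 2 2 2];

// Predicted group is 1 where f1 is larger, 2 where f2 is larger
predicted = 1 + bool2s(f2 > f1);

// Rows are the actual group, columns the predicted group
confusion = zeros(2, 2);
for k = 1:8
    confusion(actual(k), predicted(k)) = confusion(actual(k), predicted(k)) + 1;
end

// Fraction of samples on the diagonal (correctly classified)
accuracy = sum(diag(confusion)) / sum(confusion);
disp(confusion);
disp(accuracy);
```

With these values every sample falls on the diagonal (4 and 4), so the accuracy is 1.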

Here is my code:

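    clear;  // remove all unprotected variables
    chdir('/home/jeric/Documents/Acads/ap186/AC18');

    // Load the ANN and SIP toolboxes and the helper functions
    exec('/home/jeric/scilab-5.0.1/include/scilab/ANN_Toolbox_0.4.2.2/loader.sce');
    exec('/home/jeric/scilab-5.0.1/include/scilab/sip-0.4.0-bin/loader.sce');
    stacksize(4e7);
    getf('imhist.sce');
    getf('segment.sce');

    training = [];
    test = [];
    prop = [];
    loc = 'im2/';
    f = listfiles(loc);
    f = sort(f);
    [x1, y1] = size(f);

    // Feature extraction: mean R, G, B of the object pixels and the object area
    for i = 1:x1
        im = imread(strcat([loc, f(i)]));
        im2 = im2gray(im);
        if max(im2) <= 1
            im2 = round(im2*255);
        end
        [val, num] = imhist(im2);
        im2 = im2bw(im2, 210/255);
        im2 = 1 - im2;          // invert so that object pixels are 1
        ta = sum(im2);          // theoretical area (number of object pixels)
        // Linear indices of the object pixels. The original used the row
        // indices from [x,y]=find(...), which only samples the first column.
        idx = find(im2 == 1);
        temp = im(:,:,1);
        red = mean(temp(idx));
        temp = im(:,:,2);
        green = mean(temp(idx));
        temp = im(:,:,3);
        blue = mean(temp(idx));
        prop(i,:) = [red, green, blue, ta];
    end

    // Normalize each feature by its maximum over all images
    max_a = max(prop(:,4));
    max_r = max(prop(:,1));
    max_g = max(prop(:,2));
    max_b = max(prop(:,3));
    prop(:,4) = prop(:,4)/max_a;
    prop(:,1) = prop(:,1)/max_r;
    prop(:,2) = prop(:,2)/max_g;
    prop(:,3) = prop(:,3)/max_b;

    // Odd-numbered images form the training set, even-numbered the test set
    training = prop(1:2:x1, :);
    test = prop(2:2:x1, :);  // not used below

    // Group means and mean-corrected training data
    m1 = mean(training(1:4,:), 1);
    m2 = mean(training(5:8,:), 1);
    training1 = training(1:4,:);
    training2 = training(5:8,:);
    training1 = training1 - mtlb_repmat(m1, 4, 1);
    training2 = training2 - mtlb_repmat(m2, 4, 1);

    // LDA
    n1 = 4;
    n2 = 4;
    c1 = training1' * training1 / n1;   // covariance of group 1
    c2 = training2' * training2 / n2;   // covariance of group 2
    c = (n1*c1 + n2*c2)/(n1 + n2);      // pooled covariance
    cinv = inv(c);
    p = [1/2; 1/2];                     // prior probabilities

    // Evaluate both discriminant functions on each training sample
    for i = 1:8
        xi = training(i,:)';
        f1(i) = m1*cinv*xi - 0.5*m1*cinv*m1' + log(p(1));
        f2(i) = m2*cinv*xi - 0.5*m2*cinv*m2' + log(p(2));
        if f1(i) > f2(i) then
            class(i) = 1;
        else
            class(i) = 2;
        end
    end

    class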

For a more thorough discussion of LDA, see http://people.revoledu.com/kardi/tutorial/LDA/LDA.html.
